See the project’s description in French, Ukrainian, Polish, German and Romanian.

Norms of Assertion in linguistic Human-AI Interaction (NIHAI)

One of the central crises of our time is the widespread dissemination of mis- and disinformation, fake news and conspiracy theories, and the consequent erosion of trust in the media, science and governmental institutions. This communication crisis, as we will call it, has been exacerbated by digital means of discourse. And it will intensify further, because in the near future many of our interlocutors will no longer be humans but AI-driven conversational agents. We propose an in-depth, cross-cultural inquiry into responsible principles of operation for AI-driven conversational agents, so as to help mitigate or prevent a new, AI-fueled wave of the communication crisis.

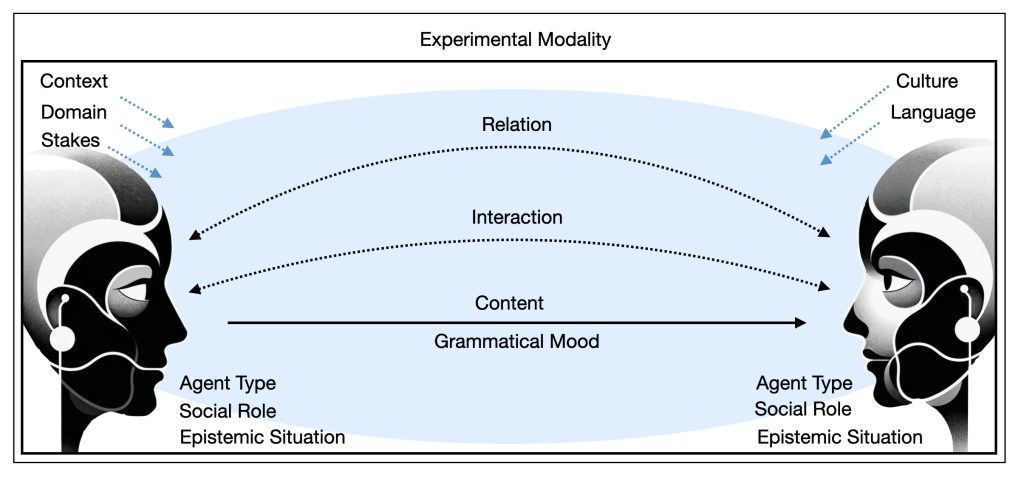

The research, which sits at the interface of ethics, linguistics, media and communication studies, and social computer science, will empirically explore (i) normative expectations in language-based human-AI interaction and (ii) how norm violations are appraised and what downstream consequences they have for the interaction, across several European and non-European languages and cultures. We will (iii) closely track relevant moderators (e.g. context, role, model complexity of the AI system, stakes) and mediators (e.g. perceived agency, projection of mind) in order to (iv) investigate how they influence the evaluation of conversational AI systems, and people's willingness to rely on them, across cultures. On the basis of the findings, we will (v) propose principles for the responsible design and use of AI-driven conversational agents, which will be tested directly on Reporter, the AI-driven lay-journalism application provided by our associated partner Polaris News.

The envisioned research is funded with more than €1 million from the ERC Chanse/Hera Program.

The project will be conducted by Prof. Markus Kneer (project leader, University of Graz), PD Dr. Markus Christen (PI, University of Zurich), Prof. Mihaela Constantinescu (PI, University of Bucharest) and Prof. Izabela Skoczeń (PI, Jagiellonian University). Polaris News, led by award-winning journalist Hannes Grassegger, is a key collaborator.

Further Reading:

Kneer, M. (2021). Norms of assertion in the United States, Germany, and Japan. Proceedings of the National Academy of Sciences, 118(37), e2105365118.

Kneer, M. (2018). The norm of assertion: Empirical data. Cognition, 177, 165-171.

Stuart, M. T., & Kneer, M. (2021). Guilty artificial minds: Folk attributions of mens rea and culpability to artificially intelligent agents. Proceedings of the ACM on Human-Computer Interaction, 5(CSCW2), 1-27.

Dyrda, A., Skoczeń, I., & Tuzet, G. (2023). Testimony: Knowledge and Garbage. Ars Interpretandi, 28(2), 75-92.

Skoczeń, I., & Smywiński-Pohl, A. (2022). The context of mistrust: Perjury ascriptions in the courtroom. In L. R. Horn (Ed.), From lying to perjury (Foundations in Law and Language). De Gruyter.

Skoczeń, I. (2021). Modelling Perjury: Between Trust and Blame. International Journal for the Semiotics of Law.

Skoczeń, I., & Smywiński-Pohl, A. (2021). Numeral Terms and the Predictive Potential of Bayesian Updating. Intercultural Pragmatics, 18(3), 359-390.

Constantinescu, M., Vică, C., Uszkai, R., & Voinea, C. (2022). Blame it on the AI? On the moral responsibility of Artificial Moral Advisors. Philosophy & Technology, 35(2), 1-26.

Constantinescu, M., Uszkai, R., Vică, C., & Voinea, C. (2022). Children-Robot Friendship, Moral Agency, and Aristotelian Virtue Development. Frontiers in Robotics and AI, 9, 818489.

Constantinescu, M., Voinea, C., Uszkai, R., & Vică, C. (2021). Understanding responsibility in Responsible AI. Dianoetic virtues and the hard problem of context. Ethics and Information Technology, 23, 803-814.

Constantinescu, M., & Crisp, R. (2022). Can robotic AI systems be virtuous and why does this matter? International Journal of Social Robotics, 14, 1547–1557. https://doi.org/10.1007/s12369-022-00887-w